If your RAG app sounds confident but answers the wrong thing, you don’t have a “smart AI.”

You have a dumb retriever feeding the model weak context.

Table of Contents

That’s the hidden truth of modern RAG:

The LLM is rarely the problem. Retrieval is.

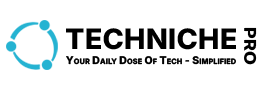

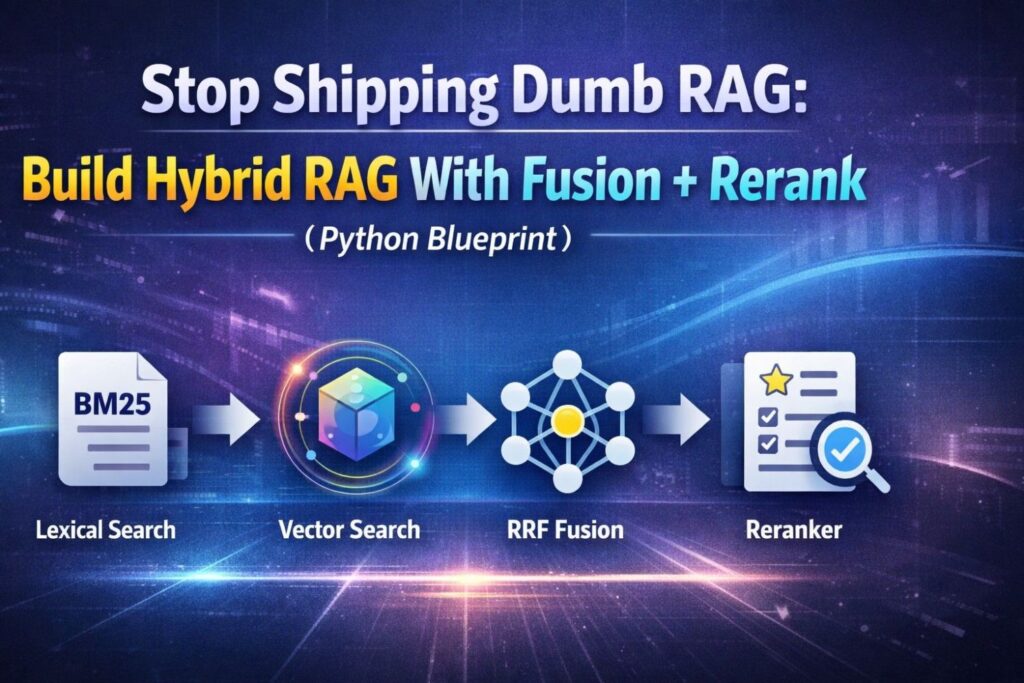

And the fastest way to fix retrieval—without turning your system into a research project—is Hybrid RAG:

BM25 + embeddings + fusion + reranking.

Hybrid RAG is the difference between:

- “I think the answer is…” ❌

and - “Here’s the exact paragraph that proves it.” ✅

Hybrid RAG works because it uses two brains:

- Lexical search (BM25) for exact words, IDs, numbers, clauses, and literal matching

- Vector search (embeddings) for meaning, paraphrases, synonyms, and fuzzy intent

Then it merges both lists using fusion and sorts the final candidates using reranking.

This article gives you a clean, production-style Hybrid RAG blueprint in Python you can copy and adapt.

What Hybrid RAG actually means

Hybrid RAG is not “RAG + embeddings.”

Hybrid RAG is a multi-stage retrieval pipeline:

- BM25 retrieval (fast, precise, literal)

- Vector retrieval (semantic, robust to paraphrases)

- Fusion (combine both result lists into one ranking)

- Rerank (a stronger model re-orders the shortlist)

- Prompt the LLM using the best evidence

This is the same core idea behind hybrid search systems described in Weaviate’s documentation.

Why “vector-only RAG” breaks in real life

Vector search is great at meaning.

But real users don’t always ask in “meaning.”

They ask with:

- exact phrases

- error messages

- product names

- abbreviations

- part numbers

- quotes

- short queries like: “refund policy 14 days”

In those cases, BM25 wins because BM25 cares about literal tokens.

Vector-only RAG fails in a very specific way:

- it retrieves something related

- the LLM fills gaps

- the answer becomes fluent fiction

Hybrid RAG avoids that trap by forcing the system to retrieve both:

- what’s exactly matching

- what’s semantically relevant

This “lexical + semantic” pairing is the classic hybrid search pattern described by Elastic.

The Hybrid RAG blueprint (simple and deadly effective)

A strong Hybrid RAG pipeline looks like this:

Step A — Candidate Generation

- BM25 top-k (e.g., 50)

- Vector top-k (e.g., 50)

Step B — Fusion

- Merge both lists into one score/rank

- Best default: Reciprocal Rank Fusion (RRF)

Step C — Rerank

- Take the merged top-N (e.g., 100)

- Rerank with a stronger model (cross-encoder)

- Keep the top 8–12 chunks for the LLM

If you do only one upgrade to your RAG system this year, do this.

Fusion: the trick that makes Hybrid RAG feel “magical”

Here’s the problem:

BM25 scores and vector similarity scores are not comparable.

They live on different planets.

Fusion solves this.

The best practical fusion method: Reciprocal Rank Fusion (RRF)

RRF doesn’t care about score scales.

It only cares about rank positions.

So if BM25 ranks a document #2 and vector ranks it #7, that document gets a strong combined vote.

RRF is famous because it’s simple, stable, and works shockingly well across retrieval systems.

Reranking: the “truth filter” your RAG is missing

Even Hybrid RAG can retrieve “almost right” chunks.

That’s where reranking changes everything.

A reranker (usually a cross-encoder) takes:

- the query

- a candidate chunk

and predicts: how relevant is this chunk to this query?

Reranking is how you turn:

- “kind of relevant”

into - “this chunk answers it directly”

Think of retrieval like casting a wide net.

Reranking is choosing the best fish.

The Python Blueprint: Hybrid RAG with BM25 + Vector + RRF + Rerank

Below is a clean, single-file blueprint that implements:

- BM25 retrieval

- embedding-based retrieval

- RRF fusion

- reranking

- final context selection

It’s designed to be readable and easy to swap into your own data sources.

# hybrid_rag_blueprint.py

# ------------------------------------------------------------

# Hybrid RAG = BM25 (lexical) + embeddings (semantic)

# + RRF fusion + reranking

#

# This is a blueprint file: plug in your own documents, storage,

# chunking, and LLM prompting.

# ------------------------------------------------------------

from __future__ import annotations

from dataclasses import dataclass

from typing import List, Dict, Tuple

import math

# -----------------------------

# Data model

# -----------------------------

@dataclass(frozen=True)

class DocChunk:

id: str

text: str

# -----------------------------

# BM25 (minimal implementation)

# -----------------------------

# If you already use Elasticsearch/OpenSearch, you can replace this

# with a real BM25 query. This lightweight BM25 is fine for demos.

# For production, use a proper index.

# -----------------------------

class TinyBM25:

def __init__(self, docs: List[DocChunk], k1: float = 1.5, b: float = 0.75):

self.docs = docs

self.k1 = k1

self.b = b

self.doc_tokens: List[List[str]] = [self._tokenize(d.text) for d in docs]

self.doc_lens = [len(toks) for toks in self.doc_tokens]

self.avgdl = sum(self.doc_lens) / max(1, len(self.doc_lens))

self.df: Dict[str, int] = {}

for toks in self.doc_tokens:

for term in set(toks):

self.df[term] = self.df.get(term, 0) + 1

self.N = len(docs)

def _tokenize(self, s: str) -> List[str]:

# Cheap tokenizer: good enough for the idea.

# Replace with better tokenization as needed.

return [

w.lower()

for w in "".join(ch if ch.isalnum() else " " for ch in s).split()

if w.strip()

]

def _idf(self, term: str) -> float:

# Standard BM25-ish IDF

df = self.df.get(term, 0)

return math.log(1 + (self.N - df + 0.5) / (df + 0.5))

def search(self, query: str, top_k: int = 10) -> List[Tuple[DocChunk, float]]:

q = self._tokenize(query)

scores: List[Tuple[int, float]] = []

for i, toks in enumerate(self.doc_tokens):

tf: Dict[str, int] = {}

for t in toks:

tf[t] = tf.get(t, 0) + 1

score = 0.0

dl = self.doc_lens[i]

norm = (1 - self.b) + self.b * (dl / self.avgdl)

for term in q:

if term not in tf:

continue

idf = self._idf(term)

freq = tf[term]

score += idf * (freq * (self.k1 + 1)) / (freq + self.k1 * norm)

scores.append((i, score))

scores.sort(key=lambda x: x[1], reverse=True)

out = [(self.docs[i], s) for i, s in scores[:top_k] if s > 0]

return out

# -----------------------------

# Dense embeddings (plug-in)

# -----------------------------

# In production you’ll use a real embedding model + vector index (FAISS, Milvus, etc.)

# This interface keeps the pipeline clean.

# -----------------------------

class Embedder:

def embed(self, texts: List[str]) -> List[List[float]]:

raise NotImplementedError("Plug in your embedding model here.")

def cosine(a: List[float], b: List[float]) -> float:

dot = sum(x * y for x, y in zip(a, b))

na = math.sqrt(sum(x * x for x in a)) or 1e-9

nb = math.sqrt(sum(y * y for y in b)) or 1e-9

return dot / (na * nb)

class TinyVectorIndex:

def __init__(self, docs: List[DocChunk], embedder: Embedder):

self.docs = docs

self.embedder = embedder

self.doc_vecs = embedder.embed([d.text for d in docs])

def search(self, query: str, top_k: int = 10) -> List[Tuple[DocChunk, float]]:

qv = self.embedder.embed([query])[0]

scored = [(d, cosine(qv, v)) for d, v in zip(self.docs, self.doc_vecs)]

scored.sort(key=lambda x: x[1], reverse=True)

return scored[:top_k]

# -----------------------------

# Fusion: Reciprocal Rank Fusion (RRF)

# -----------------------------

def rrf_fusion(

ranked_lists: List[List[DocChunk]],

k: int = 60

) -> List[Tuple[DocChunk, float]]:

"""

ranked_lists: list of ranked doc lists (best first)

returns: fused list of (doc, rrf_score)

"""

scores: Dict[str, float] = {}

lookup: Dict[str, DocChunk] = {}

for lst in ranked_lists:

for rank, doc in enumerate(lst, start=1):

lookup[doc.id] = doc

scores[doc.id] = scores.get(doc.id, 0.0) + 1.0 / (k + rank)

fused = [(lookup[doc_id], s) for doc_id, s in scores.items()]

fused.sort(key=lambda x: x[1], reverse=True)

return fused

# -----------------------------

# Reranker (plug-in)

# -----------------------------

# Cross-encoder rerankers score (query, chunk) pairs.

# Keep the interface small so you can swap implementations.

# -----------------------------

class Reranker:

def score(self, query: str, docs: List[DocChunk]) -> List[float]:

raise NotImplementedError("Plug in your reranker here.")

# -----------------------------

# The Hybrid RAG Retriever

# -----------------------------

class HybridRAGRetriever:

def __init__(

self,

docs: List[DocChunk],

embedder: Embedder,

reranker: Reranker,

bm25_k: int = 50,

vec_k: int = 50,

fuse_k: int = 60,

rerank_top_n: int = 100,

final_k: int = 10,

):

self.docs = docs

self.bm25 = TinyBM25(docs)

self.vindex = TinyVectorIndex(docs, embedder)

self.reranker = reranker

self.bm25_k = bm25_k

self.vec_k = vec_k

self.fuse_k = fuse_k

self.rerank_top_n = rerank_top_n

self.final_k = final_k

def retrieve(self, query: str) -> List[DocChunk]:

# 1) BM25 candidates

bm25_hits = self.bm25.search(query, top_k=self.bm25_k)

bm25_docs = [d for d, _ in bm25_hits]

# 2) Vector candidates

vec_hits = self.vindex.search(query, top_k=self.vec_k)

vec_docs = [d for d, _ in vec_hits]

# 3) Fusion (RRF)

fused = rrf_fusion([bm25_docs, vec_docs], k=self.fuse_k)

fused_docs = [d for d, _ in fused[: self.rerank_top_n]]

# 4) Rerank

rerank_scores = self.reranker.score(query, fused_docs)

reranked = list(zip(fused_docs, rerank_scores))

reranked.sort(key=lambda x: x[1], reverse=True)

# 5) Final top-k

return [d for d, _ in reranked[: self.final_k]]

# -----------------------------

# Example usage (wire your own models)

# -----------------------------

# Replace DummyEmbedder + DummyReranker with real implementations.

# -----------------------------

class DummyEmbedder(Embedder):

def embed(self, texts: List[str]) -> List[List[float]]:

# Placeholder embedding: DO NOT use in production.

# This just makes the file runnable without extra dependencies.

# Real embeddings come from an embedding model.

vecs = []

for t in texts:

# Simple hash-based vector (toy)

v = [0.0] * 64

for w in t.lower().split():

v[hash(w) % 64] += 1.0

vecs.append(v)

return vecs

class DummyReranker(Reranker):

def score(self, query: str, docs: List[DocChunk]) -> List[float]:

# Placeholder reranker: scores by keyword overlap (toy).

# Real rerankers use cross-encoders.

q = set(query.lower().split())

out = []

for d in docs:

dt = set(d.text.lower().split())

out.append(len(q.intersection(dt)))

return out

if __name__ == "__main__":

chunks = [

DocChunk("1", "Hybrid RAG combines BM25 and vector search, then uses fusion and reranking."),

DocChunk("2", "BM25 is strong for exact matches, like codes, names, and short queries."),

DocChunk("3", "Vector search finds semantic matches, even when words differ."),

DocChunk("4", "Reciprocal Rank Fusion merges rankings from multiple retrievers."),

DocChunk("5", "Reranking reorders candidates using a stronger relevance model."),

]

retriever = HybridRAGRetriever(

docs=chunks,

embedder=DummyEmbedder(),

reranker=DummyReranker(),

bm25_k=5,

vec_k=5,

rerank_top_n=10,

final_k=3,

)

q = "How does hybrid RAG use BM25 and reranking?"

top = retriever.retrieve(q)

print("\nTop chunks:")

for i, d in enumerate(top, start=1):

print(f"{i}. [{d.id}] {d.text}")

What the code is doing (in plain English)

1) BM25 retrieval

The code uses a lightweight BM25 to grab chunks that match your query literally.

This is where Hybrid RAG gets precision.

2) Vector retrieval

The code uses an embedder interface to retrieve chunks by meaning.

This is where Hybrid RAG gets recall.

3) RRF fusion

Instead of fighting score scales, it merges by rank voting.

This is the safest default for Hybrid RAG systems.

4) Reranking

A reranker re-sorts the fused shortlist, selecting chunks that answer the query directly.

This is where Hybrid RAG gets truth.

The “viral” recipe: the settings that work in production

If you want Hybrid RAG that feels instantly better, this setup is a strong starting point:

- BM25 top-k: 50

- Vector top-k: 50

- Fusion: RRF with k = 60

- Rerank: top 100 candidates

- Final context: top 8–12 chunks

This is the simplest version of Hybrid RAG that consistently beats “vector-only RAG.”

Bonus: the upgrade that makes Hybrid RAG even smarter (without complexity)

Once you have Hybrid RAG running, the easiest “level up” is multi-query retrieval:

Instead of searching once, you search with 3–6 rewritten queries, then fuse the results.

This approach is popularized as RAG-Fusion and is built directly on top of fusion methods like RRF.

You don’t need to do it on day one.

But if your users ask messy, vague questions, it’s a cheat code.

Hybrid RAG is not optional anymore

If you care about accuracy, trust, and real production behavior:

- Vector-only RAG is not enough.

- Keyword-only search is not enough.

- Hybrid RAG is the stable middle ground that actually works.

Hybrid RAG gives you:

- BM25 precision

- embedding recall

- fusion robustness

- reranking quality

And that’s how you stop shipping dumb RAG.

Explore More from Tech Niche Pro

If you enjoyed this deep dive into Hybrid RAG, here are some other high-value guides from Tech Niche Pro that are worth your time:

— Build an AI Agent From Scratch in Python (No LangChain): Tools, Memory, Planning — In One Clean File — https://technichepro.com/build-an-ai-agent-from-scratch-python/

— The Next AI Boom: What’s Coming, Who Wins, and How to Prepare (2026 Edition) — https://technichepro.com/next-ai-boom-2026/

— Choose First AI Product: 10-Step Field Guide to a Useful AI App (2026) — https://technichepro.com/choose-first-ai-product-10-step-field-guide/

— AI Document Extraction: LLMs That Tame Complex PDFs — https://technichepro.com/ai-document-extraction-llms-that-tame-complex-pdfs/

Plus, for more AI trends, Python tutorials, and developer playbooks, browse the full archive at https://technichepro.com/ — your trusted source for real-world AI and tech insights.

These articles will help you extend your Python-AI skills, stay ahead in the AI revolution, and apply cutting-edge techniques to real-world problems.

Sources

- Hybrid search definition (BM25 + vector fusion): https://docs.weaviate.io/weaviate/search/hybrid?utm_source=chatgpt.com

- Hybrid search concept overview: https://docs.weaviate.io/weaviate/search/hybrid?utm_source=chatgpt.com

- Reciprocal Rank Fusion (RRF) paper: https://docs.weaviate.io/weaviate/search/hybrid?utm_source=chatgpt.com

- RAG-Fusion paper: https://docs.weaviate.io/weaviate/search/hybrid?utm_source=chatgpt.com

- HyDE paper (query-to-hypothetical-document retrieval): https://arxiv.org/abs/2212.10496?utm_source=chatgpt.com

Bonus resources:

— YouTube ▶️ https://youtu.be/GHy73SBxFLs

— Book ▶️ https://www.amazon.com/dp/B0CKGWZ8JT

Let’s Connect

Email: krtarunsingh@gmail.com

LinkedIn: Tarun Singh

GitHub: github.com/krtarunsingh

Buy Me a Coffee: https://buymeacoffee.com/krtarunsingh

YouTube: @tarunaihacks

👉 If you found value here, like, share, and leave a comment — it helps more devs discover practical guides like this.