
If you’ve ever tried to build an AI agent from scratch, you’ve probably seen this:

- It “thinks” forever.

- It keeps calling tools in a loop.

- It repeats itself.

- It burns tokens like a leaking pipe.

That’s not an AI agent. That’s a confused chatbot with tools.

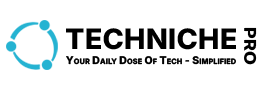

In this post, you’ll build an AI agent from scratch in Python (no LangChain, no frameworks) using a minimal agent loop that actually works:

✅ Tool calling (safe + predictable)

✅ Memory (short-term + long-term)

✅ Planning + replanning (simple, not fancy)

✅ Hard stop conditions (so it won’t spiral)

✅ A trace log you can debug and improve

By the end, you’ll have a clean, copy-paste-ready agent you can use as the base for real products.

What “AI Agent” Really Means (In Plain English)

To build an AI agent from scratch, you only need one idea:

An agent is a loop.

Plan → Act (tool) → Observe → Remember → Replan → Stop

That’s it.
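To make the loop concrete, here is a toy version in a few lines of Python. It is an illustrative sketch, not the agent built later in this post: `decide` is a hypothetical stand-in for the model call, and `tools` is a plain dict of functions.

```python
from typing import Any, Callable, Dict, List

Decision = Dict[str, Any]


def agent_loop(
    decide: Callable[[List[str]], Decision],
    tools: Dict[str, Callable[[Dict[str, Any]], Any]],
    max_steps: int = 5,
) -> str:
    """Plan -> Act -> Observe -> Remember -> Replan -> Stop, in miniature."""
    memory: List[str] = []  # short-term memory: observations so far
    for _ in range(max_steps):  # hard stop: the loop can never run forever
        decision = decide(memory)  # plan / replan from what we remember
        if decision["type"] == "final":
            return decision["answer"]  # stop: the model says it is done
        result = tools[decision["tool"]](decision["args"])  # act
        memory.append(f"{decision['tool']} -> {result}")  # observe + remember
    return "Stopped: step limit reached"


def scripted_decide(memory: List[str]) -> Decision:
    # Hypothetical "model": call one tool, then answer from the observation
    if not memory:
        return {"type": "tool_call", "tool": "add", "args": {"a": 2, "b": 3}}
    return {"type": "final", "answer": f"Result: {memory[-1]}"}


print(agent_loop(scripted_decide, {"add": lambda a: a["a"] + a["b"]}))
# prints: Result: add -> 5
```

Everything else in this post (schemas, memory files, guardrails) is about making each of those few lines safer.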

A chatbot answers once.

An agent can decide what to do next, use tools, and keep going until it’s done.

The big secret is this:

A good agent is not “smart.”

A good agent is controlled.

So we’ll focus on control: structured output, tool safety, memory compression, and stop conditions.

The Agent Design (Minimal and Practical)

We will implement:

1) Tools

A tool is just a Python function with:

- a name

- a description

- a JSON schema for inputs
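The schema can also be enforced before a tool runs, not only shown to the model. The full code below passes the schema to the prompt but does not check arguments against it. A minimal sketch of that check follows. It assumes only a small subset of JSON Schema (an object with `properties`, `required`, and primitive `type` names), and `check_args` is a hypothetical helper. A real project would more likely use a complete validator such as the `jsonschema` package.

```python
from typing import Any, Dict, List

# JSON Schema primitive type names -> Python types (subset only)
_JSON_TYPES = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "object": dict,
    "array": list,
}


def check_args(args: Dict[str, Any], schema: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the args look valid."""
    problems: List[str] = []
    props = schema.get("properties", {})
    for name in schema.get("required", []):
        if name not in args:
            problems.append(f"missing required arg: {name}")
    for name, value in args.items():
        if name not in props:
            # Stricter than JSON Schema's default, which allows extra properties
            problems.append(f"unexpected arg: {name}")
            continue
        expected = _JSON_TYPES.get(props[name].get("type", ""))
        if expected is None:
            continue  # unsupported type keyword: skip the check
        # bool is a subclass of int in Python, so reject it for integer/number
        is_bool = isinstance(value, bool)
        if not isinstance(value, expected) or (is_bool and expected is not bool):
            problems.append(f"wrong type for {name}: expected {props[name]['type']}")
    return problems


calc_schema = {"type": "object", "properties": {"expression": {"type": "string"}}, "required": ["expression"]}
print(check_args({"expression": "2 + 2"}, calc_schema))  # []
print(check_args({"expr": 4}, calc_schema))
# ['missing required arg: expression', 'unexpected arg: expr']
```

If the list is non-empty, the problems can be returned to the model as a failed tool observation instead of calling the tool at all.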

2) Memory

Two layers:

- Short-term memory: recent conversation + tool results

- Long-term memory: compact notes saved to disk and retrieved later

3) Planning

Two layers:

- High-level plan: 3–6 bullets

- Next action: one step (call a tool or answer)

4) Stop Conditions (This is what makes it “real”)

Hard limits:

- max steps

- max tool calls

- max time

- repeated actions detection

Soft limits:

- if no new progress for 2 steps, stop

- if the plan is stable and the output is ready, finalize

This is the difference between a “demo agent” and an agent people trust.
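The full code below enforces only the hard limits and omits the soft “no new progress” rule. One minimal way to approximate it is to fingerprint each step's observation and count consecutive repeats. This assumes “progress” means “an observation the agent has not seen before”. `ProgressTracker` is a hypothetical helper, not part of the original build.

```python
import hashlib
from typing import Set


class ProgressTracker:
    """Soft stop: flag a stall after `patience` consecutive steps with nothing new."""

    def __init__(self, patience: int = 2) -> None:
        self.patience = patience
        self.seen: Set[str] = set()
        self.stalled_steps = 0

    def record(self, observation: str) -> bool:
        """Record one step's observation; return True if the agent should stop."""
        # Normalize case and whitespace so trivially reformatted repeats still match
        normalized = " ".join(observation.lower().split())
        key = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if key in self.seen:
            self.stalled_steps += 1
        else:
            self.seen.add(key)
            self.stalled_steps = 0  # something new: progress resets the counter
        return self.stalled_steps >= self.patience


tracker = ProgressTracker(patience=2)
steps = ["found 3 files", "read config.yaml", "read config.yaml", "Read  config.yaml"]
print([tracker.record(s) for s in steps])  # [False, False, False, True]
```

Calling `record()` once per step and stopping when it returns True gives the “no new progress for 2 steps” behavior with `patience=2`.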

Full Working Code: Minimal AI Agent in Pure Python (No LangChain)

Copy-paste this into a single file: agent_from_scratch.py

```python
"""
agent_from_scratch.py
------------------------------------------------------------
A minimal AI agent loop in pure Python (no LangChain).

- Tools: registry + safe execution
- Memory: short-term + long-term notes (file-based)
- Planning: simple plan + next step
- Guardrails: max steps, max tool calls, max time, loop detection
- Debug: trace log per step

Requirements:
- Python 3.10+
- requests (pip install requests)

Environment:
- OPENAI_API_KEY (required)
- OPENAI_BASE_URL (optional, default: https://api.openai.com/v1)
- OPENAI_MODEL (optional, default: gpt-4o-mini)
"""

from __future__ import annotations

import ast
import json
import operator
import os
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional, Tuple

import requests

# -----------------------------
# Utilities
# -----------------------------


def now_ms() -> int:
    return int(time.time() * 1000)


def clamp_text(text: str, max_chars: int = 1800) -> str:
    text = text.strip()
    if len(text) <= max_chars:
        return text
    return text[:max_chars] + " ...[truncated]"


def safe_json_extract(text: str) -> Optional[Dict[str, Any]]:
    """
    The model must output a single JSON object.
    This function returns the first JSON object found in the response,
    tolerating surrounding prose or code fences. Returns None if there is none.
    """
    decoder = json.JSONDecoder()
    start = text.find("{")
    while start != -1:
        try:
            # raw_decode parses one JSON value and ignores whatever follows it
            obj, _ = decoder.raw_decode(text[start:])
            if isinstance(obj, dict):
                return obj
        except json.JSONDecodeError:
            pass
        start = text.find("{", start + 1)
    return None


def simple_similarity(a: str, b: str) -> float:
    """
    Tiny loop-detection heuristic: token overlap ratio (Jaccard similarity).
    Good enough to detect 'same action again and again'.
    """
    sa = set(a.lower().split())
    sb = set(b.lower().split())
    if not sa or not sb:
        return 0.0
    return len(sa & sb) / max(1, len(sa | sb))


# -----------------------------
# Tool System
# -----------------------------


@dataclass
class Tool:
    name: str
    description: str
    schema: Dict[str, Any]
    fn: Callable[[Dict[str, Any]], Any]
    safe: bool = True  # keep dangerous tools behind a flag


class ToolRegistry:
    def __init__(self) -> None:
        self._tools: Dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        if tool.name in self._tools:
            raise ValueError(f"Tool already registered: {tool.name}")
        self._tools[tool.name] = tool

    def get(self, name: str) -> Optional[Tool]:
        return self._tools.get(name)

    def as_prompt_block(self) -> str:
        """Give the model the tool list in a compact, readable form."""
        lines = ["TOOLS AVAILABLE:"]
        for t in self._tools.values():
            safe_tag = "safe" if t.safe else "restricted"
            lines.append(f"- {t.name} ({safe_tag}): {t.description}")
            lines.append(f"  schema: {json.dumps(t.schema, ensure_ascii=False)}")
        return "\n".join(lines)


# -----------------------------
# Memory System
# -----------------------------


@dataclass
class MemoryNote:
    ts_ms: int
    text: str
    tags: List[str] = field(default_factory=list)


class LongTermMemory:
    """
    Simple long-term memory:
    - Stores compact notes in a JSONL file
    - Retrieves notes by keyword overlap (simple, fast, no embeddings)
    """

    def __init__(self, path: str = "agent_memory.jsonl") -> None:
        self.path = path
        if not os.path.exists(self.path):
            with open(self.path, "w", encoding="utf-8") as f:
                f.write("")

    def add(self, text: str, tags: Optional[List[str]] = None) -> None:
        note = MemoryNote(ts_ms=now_ms(), text=text.strip(), tags=tags or [])
        with open(self.path, "a", encoding="utf-8") as f:
            f.write(json.dumps(note.__dict__, ensure_ascii=False) + "\n")

    def search(self, query: str, k: int = 5) -> List[MemoryNote]:
        query_tokens = set(query.lower().split())
        scored: List[Tuple[float, MemoryNote]] = []
        with open(self.path, "r", encoding="utf-8") as f:
            for line in f:
                line = line.strip()
                if not line:
                    continue
                try:
                    note = MemoryNote(**json.loads(line))
                    score = len(set(note.text.lower().split()) & query_tokens)
                except Exception:
                    continue  # skip corrupted or unexpected lines
                if score > 0:
                    scored.append((float(score), note))
        scored.sort(key=lambda x: x[0], reverse=True)
        return [n for _, n in scored[:k]]


class ShortTermMemory:
    """Keeps only the last N messages (older ones are dropped)."""

    def __init__(self, max_items: int = 18) -> None:
        self.max_items = max_items
        self.items: List[Dict[str, str]] = []

    def add(self, role: str, content: str) -> None:
        self.items.append({"role": role, "content": content})
        if len(self.items) > self.max_items:
            self.items = self.items[-self.max_items:]


# -----------------------------
# LLM Client (simple, direct)
# -----------------------------


class LLMClient:
    def __init__(self) -> None:
        self.api_key = os.getenv("OPENAI_API_KEY", "").strip()
        if not self.api_key:
            raise RuntimeError("Missing OPENAI_API_KEY")
        # rstrip("/") avoids a double slash if the env var ends with "/"
        self.base_url = os.getenv("OPENAI_BASE_URL", "https://api.openai.com/v1").strip().rstrip("/")
        self.model = os.getenv("OPENAI_MODEL", "gpt-4o-mini").strip()

    def chat(self, messages: List[Dict[str, str]], temperature: float = 0.2) -> str:
        url = f"{self.base_url}/chat/completions"
        headers = {"Authorization": f"Bearer {self.api_key}"}
        payload = {
            "model": self.model,
            "temperature": temperature,
            "messages": messages,
        }
        r = requests.post(url, headers=headers, json=payload, timeout=60)
        r.raise_for_status()
        data = r.json()
        return data["choices"][0]["message"]["content"] or ""


# -----------------------------
# Agent Core
# -----------------------------


@dataclass
class AgentConfig:
    max_steps: int = 10
    max_tool_calls: int = 6
    max_seconds: int = 35
    allow_restricted_tools: bool = False


@dataclass
class AgentState:
    step: int = 0
    tool_calls: int = 0
    started_ms: int = field(default_factory=now_ms)
    high_level_plan: List[str] = field(default_factory=list)
    last_actions: List[str] = field(default_factory=list)
    trace: List[Dict[str, Any]] = field(default_factory=list)


class ScratchAgent:
    """
    A minimal agent that:
    - asks the model for a plan + next action
    - executes a tool if needed
    - stores memory notes
    - stops safely
    """

    def __init__(self, llm: LLMClient, tools: ToolRegistry, ltm: LongTermMemory, cfg: AgentConfig) -> None:
        self.llm = llm
        self.tools = tools
        self.ltm = ltm
        self.cfg = cfg
        self.stm = ShortTermMemory(max_items=18)
        self.last_trace: List[Dict[str, Any]] = []  # trace of the most recent run()

    def _time_left(self, state: AgentState) -> int:
        elapsed = now_ms() - state.started_ms
        return max(0, self.cfg.max_seconds * 1000 - elapsed)

    def _should_stop(self, state: AgentState) -> Optional[str]:
        if state.step >= self.cfg.max_steps:
            return "Reached max_steps"
        if state.tool_calls >= self.cfg.max_tool_calls:
            return "Reached max_tool_calls"
        if self._time_left(state) <= 0:
            return "Reached max_seconds"
        # Loop detection: stop if the last three actions are near-identical
        if len(state.last_actions) >= 3:
            a, b, c = state.last_actions[-3:]
            if simple_similarity(a, b) > 0.85 and simple_similarity(b, c) > 0.85:
                return "Detected repeating loop"
        return None

    def _compact_memory_summary(self) -> str:
        """Summarize short-term memory in a compact form the agent can carry."""
        # Keep it simple: last 8 items only, each clamped to one short line.
        lines = []
        for it in self.stm.items[-8:]:
            content = clamp_text(it["content"], 260).replace("\n", " ")
            lines.append(f"{it['role']}: {content}")
        return "\n".join(lines)

    def _build_system_prompt(self, user_goal: str) -> str:
        """The strongest part: strict output format + clear behavior."""
        return f"""
You are a strict AI agent. Your job is to complete the user's goal safely and efficiently.

USER GOAL:
{user_goal}

RULES:
- You MUST respond with exactly ONE JSON object and nothing else.
- Choose ONE action per step.
- If you have enough info, finish with a final answer.
- Keep plans short and simple.
- Avoid infinite loops. If stuck, explain what is missing and finish.

OUTPUT JSON SCHEMA:
{{
  "type": "plan" | "tool_call" | "final",
  "plan": ["..."] (required if type="plan"),
  "next": "... one sentence next step ..." (required if type="plan"),
  "tool": "tool_name" (required if type="tool_call"),
  "args": {{...}} (required if type="tool_call"),
  "answer": "... final answer ..." (required if type="final"),
  "memory_note": "... short durable note to store ..." (optional)
}}

{self.tools.as_prompt_block()}
""".strip()

    def _model_step(self, user_goal: str, state: AgentState) -> Dict[str, Any]:
        ltm_hits = self.ltm.search(user_goal, k=4)
        ltm_block = "\n".join(f"- {clamp_text(n.text, 240)}" for n in ltm_hits) or "- (none)"
        plan_block = "\n".join(f"- {p}" for p in state.high_level_plan) or "(not set)"
        context = f"""
SHORT-TERM MEMORY (compact):
{self._compact_memory_summary()}

LONG-TERM MEMORY (top hits):
{ltm_block}

CURRENT PLAN:
{plan_block}

STEP: {state.step}
TOOL_CALLS: {state.tool_calls}
TIME_LEFT_MS: {self._time_left(state)}
""".strip()
        messages = [
            {"role": "system", "content": self._build_system_prompt(user_goal)},
            {"role": "user", "content": context},
        ]
        raw = self.llm.chat(messages, temperature=0.2)
        obj = safe_json_extract(raw)
        if not obj:
            # Fallback: force a finish instead of guessing what the model meant
            return {"type": "final", "answer": clamp_text(raw, 900)}
        return obj

    def _run_tool(self, name: str, args: Dict[str, Any]) -> Dict[str, Any]:
        tool = self.tools.get(name)
        if not tool:
            return {"ok": False, "error": f"Unknown tool: {name}", "data": None, "latency_ms": 0}
        if (not tool.safe) and (not self.cfg.allow_restricted_tools):
            return {"ok": False, "error": f"Tool is restricted: {name}", "data": None, "latency_ms": 0}
        t0 = now_ms()
        try:
            out = tool.fn(args)
            return {"ok": True, "error": None, "data": out, "latency_ms": now_ms() - t0}
        except Exception as e:
            return {"ok": False, "error": str(e), "data": None, "latency_ms": now_ms() - t0}

    def run(self, user_goal: str) -> str:
        state = AgentState()
        self.last_trace = state.trace  # exposed for debugging after the run
        self.stm.add("user", user_goal)

        while True:
            stop_reason = self._should_stop(state)
            if stop_reason:
                final = f"I’m stopping safely: {stop_reason}.\n\nWhat I can do next: clarify missing info or reduce scope."
                self.stm.add("assistant", final)
                return final

            state.step += 1
            decision = self._model_step(user_goal, state)
            # str(... or "") tolerates a missing or null "type"
            dtype = str(decision.get("type") or "").strip()
            trace_item: Dict[str, Any] = {"step": state.step, "decision": decision, "tool_result": None}
            state.trace.append(trace_item)  # every step is traced, including the final one

            # Store a durable memory note (if provided)
            mem_note = str(decision.get("memory_note") or "").strip()
            if mem_note:
                self.ltm.add(mem_note, tags=["agent_note"])

            if dtype == "plan":
                plan = decision.get("plan") or []
                if isinstance(plan, list) and plan:
                    state.high_level_plan = [str(x)[:140] for x in plan][:6]
                nxt = str(decision.get("next") or "").strip()
                state.last_actions.append("plan:" + nxt)
                self.stm.add("assistant", f"PLAN: {state.high_level_plan}\nNEXT: {nxt}")
                continue

            if dtype == "tool_call":
                tool_name = str(decision.get("tool") or "").strip()
                args = decision.get("args") or {}
                if not isinstance(args, dict):
                    args = {}
                state.tool_calls += 1
                # Stable signature so repeated identical calls are easy to detect
                action_sig = f"tool:{tool_name} args:{json.dumps(args, sort_keys=True)}"
                state.last_actions.append(action_sig)
                result = self._run_tool(tool_name, args)
                trace_item["tool_result"] = result
                obs = {
                    "tool": tool_name,
                    "ok": result["ok"],
                    "error": result["error"],
                    # default=str keeps non-JSON tool outputs from crashing the loop
                    "data": clamp_text(json.dumps(result["data"], ensure_ascii=False, default=str), 1200)
                    if result["ok"] else None,
                    "latency_ms": result["latency_ms"],
                }
                self.stm.add("assistant", f"TOOL_OBSERVATION: {json.dumps(obs, ensure_ascii=False)}")
                continue

            answer = str(decision.get("answer") or "").strip()
            if dtype != "final" and not answer:
                # Unknown type with no answer: record it and let the guardrails bound retries
                state.last_actions.append("invalid:" + dtype)
                self.stm.add("assistant", f"INVALID_DECISION: type {dtype!r}; expected plan, tool_call, or final.")
                continue

            # Final answer
            answer = answer or "Done."
            self.stm.add("assistant", answer)
            return answer


# -----------------------------
# Tools (safe, useful)
# -----------------------------

# Whitelisted calculator operators (no ** so "9**9**9**9" cannot hang the process)
_CALC_OPS: Dict[type, Callable[..., Any]] = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.UAdd: operator.pos,
    ast.USub: operator.neg,
}


def _calc_eval(node: ast.AST) -> Any:
    """Evaluate a parsed expression, allowing only numbers and whitelisted operators."""
    if isinstance(node, ast.Expression):
        return _calc_eval(node.body)
    if isinstance(node, ast.Constant) and type(node.value) in (int, float):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _CALC_OPS:
        return _CALC_OPS[type(node.op)](_calc_eval(node.left), _calc_eval(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _CALC_OPS:
        return _CALC_OPS[type(node.op)](_calc_eval(node.operand))
    raise ValueError("Only numbers, + - * / and parentheses are allowed")


def tool_calc(args: Dict[str, Any]) -> Any:
    expr = str(args.get("expression", "")).strip()
    if not expr:
        raise ValueError("Missing expression")
    # Parse instead of eval(): names, calls, attributes, and ** are all rejected
    return _calc_eval(ast.parse(expr, mode="eval"))


def tool_summarize(args: Dict[str, Any]) -> Any:
    text = clamp_text(str(args.get("text", "")), 3000)
    max_lines = max(1, min(int(args.get("max_lines", 6)), 20))
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    # Simple heuristic "summary": the first non-empty lines, each trimmed
    return [ln[:180] for ln in lines[:max_lines]]


# Sandbox root for read_file (assumption: the current working directory)
SANDBOX_DIR = os.path.realpath(os.getcwd())


def tool_read_file(args: Dict[str, Any]) -> Any:
    path = str(args.get("path", "")).strip()
    if not path:
        raise ValueError("Missing path")
    # Resolve the real path (following symlinks) and require it to stay inside the sandbox.
    # Unlike a plain ".." check, this also blocks absolute paths such as /etc/passwd.
    full = os.path.realpath(os.path.join(SANDBOX_DIR, path))
    if os.path.commonpath([SANDBOX_DIR, full]) != SANDBOX_DIR:
        raise ValueError("Path is outside the sandbox directory")
    with open(full, "r", encoding="utf-8") as f:
        return clamp_text(f.read(), 6000)


def build_tools() -> ToolRegistry:
    reg = ToolRegistry()
    reg.register(Tool(
        name="calc",
        description="Evaluate a simple math expression safely (numbers, + - * /, parentheses).",
        schema={"type": "object", "properties": {"expression": {"type": "string"}}, "required": ["expression"]},
        fn=tool_calc,
        safe=True,
    ))
    reg.register(Tool(
        name="summarize",
        description="Create a short bullet summary from text (fast heuristic).",
        schema={"type": "object", "properties": {"text": {"type": "string"}, "max_lines": {"type": "integer"}}, "required": ["text"]},
        fn=tool_summarize,
        safe=True,
    ))
    reg.register(Tool(
        name="read_file",
        description="Read a local text file inside the working directory (sandboxed).",
        schema={"type": "object", "properties": {"path": {"type": "string"}}, "required": ["path"]},
        fn=tool_read_file,
        safe=False,  # file access is restricted by default
    ))
    return reg


# -----------------------------
# Demo
# -----------------------------

if __name__ == "__main__":
    llm = LLMClient()
    tools = build_tools()
    ltm = LongTermMemory(path="agent_memory.jsonl")
    cfg = AgentConfig(
        max_steps=10,
        max_tool_calls=6,
        max_seconds=35,
        allow_restricted_tools=False,
    )
    agent = ScratchAgent(llm=llm, tools=tools, ltm=ltm, cfg=cfg)
    goal = (
        "Create a short plan to write a Medium post about building an AI agent from scratch in Python "
        "with tools, memory, planning, and stop conditions. Then produce the final outline."
    )
    print(agent.run(goal))
    # Debug: one line per step from the trace log
    for item in agent.last_trace:
        print(f"[step {item['step']}] {item['decision'].get('type')}")
```

Why This “From Scratch” Agent Actually Works

If you want your post to go viral, this is the part readers remember:

1) It forces structured JSON output

Agents break when outputs are messy. Here, the model must choose:

plan, tool_call, or final

That one constraint removes most of the agent chaos, because every response can be parsed and routed.
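Forcing JSON only helps if the loop also checks what came back. The `run()` method above dispatches on "type" but does not verify that each type's required fields are present. A minimal sketch of a stricter check follows, assuming the decision format from the system prompt in the code above. `validate_decision` is a hypothetical helper.

```python
from typing import Any, Dict, Optional

# Required fields (and their expected Python types) for each decision type,
# mirroring the OUTPUT JSON SCHEMA in the system prompt
REQUIRED_FIELDS: Dict[str, Dict[str, type]] = {
    "plan": {"plan": list, "next": str},
    "tool_call": {"tool": str, "args": dict},
    "final": {"answer": str},
}


def validate_decision(obj: Any) -> Optional[str]:
    """Return an error message, or None if the decision is well-formed."""
    if not isinstance(obj, dict):
        return "decision must be a JSON object"
    dtype = obj.get("type")
    if not isinstance(dtype, str) or dtype not in REQUIRED_FIELDS:
        return f"type must be one of {sorted(REQUIRED_FIELDS)}, got {dtype!r}"
    for name, expected in REQUIRED_FIELDS[dtype].items():
        if not isinstance(obj.get(name), expected):
            return f"{dtype} requires '{name}' of type {expected.__name__}"
    return None


print(validate_decision({"type": "final", "answer": "42"}))  # None
print(validate_decision({"type": "tool_call", "tool": "calc"}))
# tool_call requires 'args' of type dict
print(validate_decision({"type": "think"}))
# type must be one of ['final', 'plan', 'tool_call'], got 'think'
```

On failure, the error string can be fed back to the model as an observation so it can correct itself on the next step. The retry still counts against the existing step limit.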

2) It treats tool outputs as observations

The agent doesn’t “guess.”

It acts, observes, and updates memory.

3) It has real stop conditions

Most “agent from scratch” tutorials ignore this.

But stop conditions are what make your agent reliable.

If your agent can’t stop, it’s not an agent — it’s a runaway process.

4) Memory is compact by design

Short-term memory is trimmed.

Long-term memory stores only durable notes.

This prevents context bloat, which is the silent killer of agent performance.

How to Make This Agent Feel “Smart” Without Making It Complex

If you want to build an AI agent from scratch that feels impressive to readers, add these upgrades next:

Upgrade A: Memory Compression (Token Saver)

Every 4–5 steps, replace the “chat history” with a tight summary:

- what we know

- what we tried

- what’s next

This makes agents faster, cheaper, and less repetitive.
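A minimal sketch of that rule follows. It assumes a `summarize` callable that you would back with one extra LLM call; here `summarize` is a hypothetical placeholder, stubbed out in the example. Every `every` steps, the older messages are replaced by a single summary message built around the three headings above, and the newest messages are kept verbatim.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]

SUMMARY_PROMPT = (
    "Summarize this agent history under three headings: "
    "WHAT WE KNOW, WHAT WE TRIED, WHAT'S NEXT. Be brief.\n\n"
)


def maybe_compress(
    history: List[Message],
    step: int,
    summarize: Callable[[str], str],
    every: int = 5,
    keep_last: int = 2,
) -> List[Message]:
    """Every `every` steps, replace all but the newest messages with one summary."""
    if step == 0 or step % every != 0 or len(history) <= keep_last:
        return history  # not a compression step, or nothing old enough to compress
    cut = len(history) - keep_last
    old, recent = history[:cut], history[cut:]
    transcript = "\n".join(f"{m['role']}: {m['content']}" for m in old)
    summary = summarize(SUMMARY_PROMPT + transcript)  # one extra LLM call in practice
    return [{"role": "assistant", "content": f"MEMORY SUMMARY:\n{summary}"}] + recent


# Example with a stubbed summarizer (a real one would call the model)
history = [{"role": "user", "content": f"message {i}"} for i in range(6)]
compressed = maybe_compress(history, step=5, summarize=lambda prompt: "WE KNOW: ...")
print(len(compressed))  # 3: one summary + the 2 newest messages
```

In the agent above, one plausible hook is right after `state.step += 1` in `run()`, applied to `self.stm.items`. That wiring is an assumption and is not part of the original code.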

Upgrade B: Reflection (One Extra Pass)

After the agent produces a final answer, do one more call:

- “Find weaknesses”

- “Fix them once”

That single extra pass often gives a visible jump in output quality.
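A minimal sketch of that extra pass follows. It assumes `llm` is any function that takes a prompt string and returns text; a thin wrapper around `LLMClient.chat` from the code above would work, but that wrapper is hypothetical and not shown. This version splits the pass into two calls (critique, then revise) so the critique can be logged. It makes at most two calls and can never loop.

```python
from typing import Callable


def reflect_once(goal: str, answer: str, llm: Callable[[str], str]) -> str:
    """One critique call plus at most one revision call; never loops."""
    critique = llm(
        f"GOAL:\n{goal}\n\nANSWER:\n{answer}\n\n"
        "List the 3 biggest weaknesses of this answer. If there are none, reply exactly: NONE"
    ).strip()
    if critique.upper() == "NONE":
        return answer  # nothing to fix: skip the second call
    revised = llm(
        f"GOAL:\n{goal}\n\nANSWER:\n{answer}\n\nWEAKNESSES:\n{critique}\n\n"
        "Rewrite the answer once to fix these weaknesses. Return only the improved answer."
    ).strip()
    return revised or answer  # keep the original if the model returns nothing


# Example with a scripted fake model (a real one would wrap LLMClient.chat)
replies = iter(["Missing a conclusion.", "Improved answer with a conclusion."])
print(reflect_once("Write an outline", "Intro, Body", lambda prompt: next(replies)))
# prints: Improved answer with a conclusion.
```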

Upgrade C: Better Tool Contracts

Return tool results in a consistent envelope:

- ok

- data

- error

- latency

Your agent becomes debuggable and production-friendly.
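The `_run_tool` method in the code above already returns a dict with these keys. If you prefer a typed contract, a minimal sketch is a small dataclass plus a wrapper that always fills the envelope, even when the tool raises. `ToolResult` and `call_with_envelope` are hypothetical names, not part of the original build.

```python
import json
import time
from dataclasses import asdict, dataclass
from typing import Any, Callable, Dict, Optional


@dataclass
class ToolResult:
    ok: bool
    data: Any = None
    error: Optional[str] = None
    latency_ms: int = 0


def call_with_envelope(fn: Callable[[Dict[str, Any]], Any], args: Dict[str, Any]) -> ToolResult:
    """Run a tool and always return the same envelope instead of raising."""
    t0 = time.perf_counter()
    try:
        data = fn(args)
        ok, error = True, None
    except Exception as e:  # the failure becomes an observation for the agent
        data, ok, error = None, False, f"{type(e).__name__}: {e}"
    latency_ms = int((time.perf_counter() - t0) * 1000)
    return ToolResult(ok=ok, data=data, error=error, latency_ms=latency_ms)


good = call_with_envelope(lambda a: a["x"] * 2, {"x": 21})
bad = call_with_envelope(lambda a: a["missing"], {})
print(good.ok, good.data)  # True 42
print(bad.ok, bad.error)   # False KeyError: 'missing'
print(json.dumps(asdict(bad)))  # the envelope is JSON-ready for short-term memory
```

Including the exception type in `error` helps the model tell a bad argument apart from a broken tool.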

The Viral Angle: The “Agent Loop Problem” (And the Fix)

Here’s a simple truth most people don’t say clearly:

Agents fail because they don’t know when to stop.

Your readers have felt this pain.

So make the “viral hook” crystal clear:

- “My agent kept looping and burned tokens.”

- “I fixed it with four guardrails.”

- “Here is the exact code.”

That’s shareable. That gets claps.

Real Use Cases Readers Will Share

If you want max claps and shares, tie your “build an AI agent from scratch” tutorial to a real-life workflow people want:

1) Repo Triage Agent

- reads error logs

- suggests root cause

- proposes patch plan

2) Document Agent

- reads PDFs / contracts / policies

- extracts facts

- creates a clean summary

3) Research → Write → Verify Agent

- gathers info (from your own sources)

- drafts content

- checks gaps

- improves clarity

Pick one and add a short demo story around it. Stories beat theory.
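For the repo triage agent, a useful first tool pulls the interesting lines out of a log. The sketch below follows the same `fn(args)` shape as the tools in the full code above. The name `tool_find_errors`, its `log_text` and `max_hits` arguments, and the matching patterns are all assumptions for illustration.

```python
import re
from typing import Any, Dict, List

# Hypothetical patterns: tune these to your own log format
ERROR_PATTERN = re.compile(
    r"\b(ERROR|CRITICAL|FATAL)\b|Traceback \(most recent call last\)|\w+(Error|Exception):"
)


def tool_find_errors(args: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Return matching log lines with line numbers, capped to keep context small."""
    text = str(args.get("log_text", ""))
    max_hits = max(1, int(args.get("max_hits", 20)))
    hits: List[Dict[str, Any]] = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        if ERROR_PATTERN.search(line):
            hits.append({"line": lineno, "text": line.strip()[:200]})
            if len(hits) >= max_hits:
                break
    return hits


sample_log = "INFO boot\nERROR db timeout\nValueError: bad config\nINFO retry"
print(tool_find_errors({"log_text": sample_log}))
# prints: [{'line': 2, 'text': 'ERROR db timeout'}, {'line': 3, 'text': 'ValueError: bad config'}]
```

It could be registered like the other tools, with a schema such as {"type": "object", "properties": {"log_text": {"type": "string"}, "max_hits": {"type": "integer"}}, "required": ["log_text"]}.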

Final Words (And Your Next Step)

If you came here to build an AI agent from scratch, you now have the cleanest foundation:

- tools

- memory

- planning

- guardrails

- simple Python

- no frameworks

This is not “toy code.”

This is a solid base you can turn into a product.

If you want more practical AI + Python guides, I also write on my blog:

- Tech Niche Pro (main): https://technichepro.com/

- Build Wealth on a Salary (simple system): https://technichepro.com/build-wealth-on-a-salary-simple-system/

- AI Smart Career 2026: https://technichepro.com/ai-smart-career-2026/

- The Next AI Boom 2026: https://technichepro.com/next-ai-boom-2026/

If this helped you, share it with one builder friend who’s struggling with agent loops.

Bonus resources:

— YouTube ▶️ https://youtu.be/GHy73SBxFLs

— Book ▶️ https://www.amazon.com/dp/B0CKGWZ8JT

Let’s Connect

Email: krtarunsingh@gmail.com

LinkedIn: Tarun Singh

GitHub: github.com/krtarunsingh

Buy Me a Coffee: https://buymeacoffee.com/krtarunsingh

YouTube: @tarunaihacks

👉 If you found value here, clap, share, and leave a comment — it helps more devs discover practical guides like this.

More Builds You’ll Love

- On-Device AI Is Finally Real — Build a Copilot+ PC App That Runs 100% Offline (pub.towardsai.net)

- Laptop-Only LLM: Tune Google Gemma 3 in Minutes (Code Inside) (pub.towardsai.net)

- Build an AI PDF Search Engine in a Weekend (Python, FAISS, RAG — Full Code) (pub.towardsai.net)

- The Next AI Boom: What Comes After AI Agents and Agentic AI? (medium.com)

- AI-Powered OCR with Phi-3-Vision-128K: The Future of Document Processing (ai.gopubby.com)

- RAG Frameworks Explored: LlamaIndex vs. LangChain for Next-Gen LLMs (ai.gopubby.com)

- Mastering RAG Chunking Techniques for Enhanced Document Processing (ai.gopubby.com)

📖 Ready to rebuild with me?

Rebuild: Your next version is only one decision away
