What the next AI boom will look like is the question on every founder’s whiteboard, research roadmap, and enterprise strategy deck. We’ve seen AI agents and agentic AI leap from lab demos to real workflows: planning tasks, invoking tools, and closing the loop with minimal human intervention. But the NEXT AI BOOM won’t be about a single model or feature. It will be about systems: orchestrated ecosystems of models, data, tools, and governance, designed to operate reliably in the messy, multimodal, multi-stakeholder real world.

This long-form guide goes deeper on each frontier (autonomous AI ecosystems, lifelong learning, AI-powered software engineering, multimodal AI, digital twins, neuro-AI & BCI, AI for scientific discovery, and the trust stack) with practical examples, architectural patterns, KPIs, risks, and implementation checklists. Use it as your evergreen playbook to ideate products, prioritize R&D, and speak to investors or leadership about where value will concentrate next.

AI agents and agentic AI dominated 2024, but the next boom will build on advancements like GPT-5, which expands reasoning, multi-modal abilities, and tool integration. For a full breakdown, check out this GPT-5 Upgrade Guide.

AUTONOMOUS AI ECOSYSTEMS: THE OPERATING SYSTEM FOR THE NEXT AI BOOM

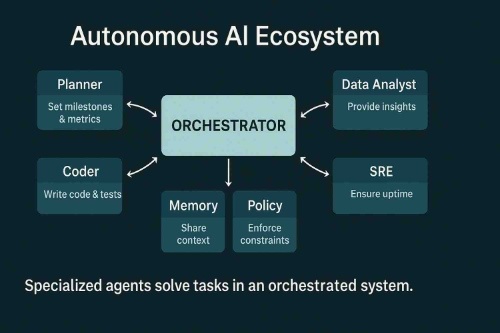

Core idea: Single agents solve narrow tasks; autonomous AI ecosystems solve processes. Picture a graph of specialized agents (planner, coder, tester, data analyst, growth analyst, SRE) coordinated by an orchestrator that routes tasks, maintains shared context, and enforces budget, latency, and policy constraints. This is the NEXT AI BOOM as an operating system: workflows as first-class citizens.

Architecture pattern:

- Planner (global agent): converts a high-level goal into a plan with milestones and metrics.

- Workers (specialists): code-gen, retrieval, analytics, QA, compliance.

- Memory layer: vector store + structured memory (decisions, assumptions, artifacts).

- Tooling mesh: repo, CI, issue tracker, docs, DB, observability, billing.

- Policy guardrails: red-team prompts, allowlists/denylists, PII defense, rate & spend caps.

- Human-in-the-loop: approval thresholds, escalation policies.

KPIs to watch: end-to-end cycle time (idea→PR→deploy), quality metrics (test pass rate, incident count), unit economics (tokens/CPU per deliverable), compliance checklist pass rate.

Monetization paths: “Agent-as-a-Service” for verticals, orchestration platforms, domain skill packs (e.g., FinOps, RevOps, MLOps), SLAs for reliability and governance.

Risks & mitigations: emergent behavior → sandboxing and staged rollouts; prompt injection → input sanitization & tool allowlists; provenance ambiguity → immutable logs.
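The allowlist and spend-cap guardrails described above can be sketched as a check that runs before every tool call. This is a minimal illustration, not a production policy engine: `PolicyGuard`, `PolicyViolation`, the tool names, and the dollar amounts are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Set


class PolicyViolation(Exception):
    """Raised when a tool call breaks the allowlist or the spend cap."""


@dataclass
class PolicyGuard:
    allowed_tools: Set[str]
    spend_cap_usd: float
    spent_usd: float = 0.0
    log: List[str] = field(default_factory=list)

    def authorize(self, tool: str, est_cost_usd: float) -> None:
        """Check a proposed tool call before it runs and record the decision."""
        if tool not in self.allowed_tools:
            self.log.append(f"DENY {tool}: not on allowlist")
            raise PolicyViolation(f"tool '{tool}' is not allowlisted")
        if self.spent_usd + est_cost_usd > self.spend_cap_usd:
            self.log.append(f"DENY {tool}: spend cap exceeded")
            raise PolicyViolation("spend cap would be exceeded")
        self.spent_usd += est_cost_usd
        self.log.append(f"ALLOW {tool}: ${est_cost_usd:.2f}")


# Hypothetical tool names and costs, for illustration only.
guard = PolicyGuard(allowed_tools={"repo.read", "ci.run"}, spend_cap_usd=1.00)
guard.authorize("repo.read", 0.40)
guard.authorize("ci.run", 0.50)
```

A denied call raises before the tool runs, and every decision lands in `guard.log`, which is the kind of record the provenance mitigation depends on.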

LIFELONG LEARNING AI: CONTINUAL ADAPTATION WITHOUT CATASTROPHIC FORGETTING

Why it anchors the NEXT AI BOOM: Models trained once and deployed “frozen” fall behind. Lifelong learning (a.k.a. continual learning) lets systems update safely as data drifts, rules change, or users evolve—without throwing away previous skills.

Approaches (practical):

- Externalized memory first: keep core weights stable; store new knowledge in retrieval layers (RAG) + structured memory.

- Periodic fine-tune with safety rails: batch updates with eval gates and rollback.

- Adapters/LoRA layers: cheaply add capabilities without retraining the base.

- Federated or on-device learning: update locally to respect privacy/regulation.

- Governed adaptation: every weight or policy change must pass eval suites (toxicity, bias, robustness).
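The "eval gates and rollback" idea from the list above can be sketched as a promotion function: a candidate update replaces the live version only if every eval clears its threshold. The `Model` type, the eval names, and the threshold values are assumptions made for this example; real evals would run test suites rather than read a score from a dictionary.

```python
from typing import Callable, Dict

Model = Dict[str, float]  # stand-in for weights, adapters, or a policy config


def gated_update(
    current: Model,
    candidate: Model,
    evals: Dict[str, Callable[[Model], float]],
    thresholds: Dict[str, float],
) -> Model:
    """Promote `candidate` only if every eval meets its threshold; else keep `current`."""
    for name, run_eval in evals.items():
        score = run_eval(candidate)
        if score < thresholds[name]:
            print(f"rollback: {name}={score:.3f} < {thresholds[name]:.3f}")
            return current
    return candidate


# Hypothetical evals: each returns a score in [0, 1], higher is better.
evals = {
    "robustness": lambda m: m["robustness"],
    "toxicity_safety": lambda m: m["toxicity_safety"],
}
thresholds = {"robustness": 0.90, "toxicity_safety": 0.99}

live = {"robustness": 0.92, "toxicity_safety": 0.995}
risky = {"robustness": 0.95, "toxicity_safety": 0.97}  # stronger on one axis, weaker on safety
live = gated_update(live, risky, evals, thresholds)    # the gate keeps the old version
```

The design choice worth noting is that a gain on one metric never buys back a regression on another: each eval is a hard gate, not a term in a weighted average.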

KPIs: regret (cumulative error relative to the best available model over time), on-policy accuracy, drift detection latency, rollback frequency, safety-eval pass rate.

Playbook: start with RAG + policy memory → add adapters for recurring patterns → evaluate quarterly weight updates → automate drift alerts.
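The last playbook step, automated drift alerts, can be approximated with a rolling error-rate monitor. The sketch below assumes you can label each prediction as right or wrong after the fact; `DriftMonitor`, the baseline, the tolerance, and the window size are illustrative choices, and a real deployment might use a statistical drift test instead.

```python
from collections import deque


class DriftMonitor:
    def __init__(self, baseline_error: float, tolerance: float, window: int):
        self.baseline_error = baseline_error
        self.tolerance = tolerance
        self.recent = deque(maxlen=window)  # 1 = wrong prediction, 0 = correct

    def observe(self, was_error: bool) -> bool:
        """Record one outcome; return True once the window is full and has drifted."""
        self.recent.append(1 if was_error else 0)
        if len(self.recent) < self.recent.maxlen:
            return False
        rate = sum(self.recent) / len(self.recent)
        return rate > self.baseline_error + self.tolerance


monitor = DriftMonitor(baseline_error=0.10, tolerance=0.15, window=10)
stream = [False] * 10 + [True] * 5  # errors start at index 10
alerts = [i for i, err in enumerate(stream) if monitor.observe(err)]
print(alerts)  # indices at which the monitor raised an alert
```

The gap between the index where errors begin and the first alert is one way to measure the "drift detection latency" KPI listed above.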

AI-POWERED SOFTWARE DEVELOPMENT: FROM COPILOTS TO AUTONOMOUS ENGINEERS

From helpful to hands-on: The NEXT AI BOOM is a move from “suggest code” to “ship software”. That means closed-loop capability: interpret specs, scaffold projects, implement features, generate tests, run linters, open PRs, respond to review, and handle deploys.

Reference workflow:

- Intake: spec in natural language + architecture constraints.

- Planning: milestone plan, acceptance criteria, metrics.

- Implementation: code generation with repo context, style guides, and security rules.

- Validation: unit/integration tests, static analysis, vulnerability scan.

- Review & PR: structured diffs, explanations, mitigation notes.

- Release: canary deploy, telemetry hooks, rollback plan.

- Post-release: feedback loop to improve prompts, tools, memory.
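The reference workflow above is essentially a sequence of stages where each one can stop the run. A minimal sketch of that gating logic follows; the stage functions are placeholders (no real code generation, test runner, or deploy tool is called), and the context keys such as `failed_tests` and `vulns` are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Stage = Tuple[str, Callable[[Dict], bool]]


def run_pipeline(stages: List[Stage], ctx: Dict) -> List[str]:
    """Run stages in order; stop at the first stage that reports failure."""
    completed = []
    for name, step in stages:
        if not step(ctx):
            ctx["halted_at"] = name
            break
        completed.append(name)
    return completed


def implement(ctx: Dict) -> bool:  # placeholder for code generation
    ctx["diff"] = "added endpoint + tests"
    return True


def validate(ctx: Dict) -> bool:   # placeholder for tests, static analysis, vulnerability scan
    return ctx["failed_tests"] == 0 and ctx["vulns"] == 0


def release(ctx: Dict) -> bool:    # placeholder for a canary deploy
    ctx["released"] = True
    return True


stages = [("implementation", implement), ("validation", validate), ("release", release)]

ok = run_pipeline(stages, {"failed_tests": 0, "vulns": 0})
blocked_ctx = {"failed_tests": 2, "vulns": 0}
blocked = run_pipeline(stages, blocked_ctx)
print(ok, blocked, blocked_ctx["halted_at"])
```

Because release sits behind validation, a failing test run never reaches the deploy step, and `halted_at` tells the post-release feedback loop where the run stopped.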

KPIs: PR time to merge, test coverage delta, post-release incident rate, rework %, maintainer trust score.

Risks: insecure code, jailbreak prompts via issue text, hidden test flakiness. Mitigate with gated permissions, secrets scanning, SBOM, reproducible builds, and human approval for risky actions.

MULTIMODAL AI: FROM SINGLE-SENSE TO FULL-STACK PERCEPTION

Why it’s central to the NEXT AI BOOM: Real-world tasks are multimodal: text, images, video, audio, logs, sensor data. Multimodal AI fuses these into coherent models of context and intent, enabling assistants that “see,” “listen,” and “read,” not just “chat.”

Use cases:

- Ops & security: correlate surveillance video, badge logs, incident tickets, and network telemetry; generate human-readable incident narratives and action plans.

- Learning: tutors that adjust to a pupil’s tone, pace, facial cues, and code errors.

- Support & field service: technicians live-stream equipment, the model reads manuals and labels, then issues a safe, step-wise guide.

- Healthcare: combine medical imaging with clinical notes to create summaries for rounds.

Implementation stack: video frames → vision encoders; audio → ASR & prosody; text → LLM; sensor → time-series; fusion layer aligns modalities; policy layer filters PII; explanation layer cites evidence.
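The implementation stack above can be sketched as a small pipeline: route each input to an encoder for its modality, pass the result through a policy filter, and attach the evidence to every claim. Everything here is a stand-in. The encoders are stubs rather than real vision or speech models, the evidence labels are hypothetical, the PII filter only masks email addresses, and the "fusion" is a simple merge of per-modality findings rather than a learned alignment.

```python
import re
from typing import Callable, Dict, List

Finding = Dict[str, str]  # keys: "modality", "claim", "evidence"


# Stub encoders: each stands in for a real model (vision encoder, LLM, and so on).
def encode_video(x: str) -> Finding:
    return {"modality": "video", "claim": f"saw: {x}", "evidence": "video:clip-1"}


def encode_text(x: str) -> Finding:
    return {"modality": "text", "claim": f"ticket says: {x}", "evidence": "text:ticket-1"}


ENCODERS: Dict[str, Callable[[str], Finding]] = {"video": encode_video, "text": encode_text}

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def redact_pii(finding: Finding) -> Finding:
    """Policy layer: mask email addresses in the claim (emails only, for illustration)."""
    return {**finding, "claim": EMAIL.sub("[REDACTED]", finding["claim"])}


def fuse(inputs: Dict[str, str]) -> List[str]:
    """Encode each known modality, apply the policy filter, and cite evidence per claim."""
    findings = [redact_pii(ENCODERS[m](x)) for m, x in inputs.items() if m in ENCODERS]
    return [f"{f['claim']} [{f['evidence']}]" for f in findings]


narrative = fuse({
    "video": "door forced open",
    "text": "reported by jane.doe@example.com",
    "audio": "skipped: no encoder registered for this modality",
})
for line in narrative:
    print(line)
```

Keeping the evidence label on every line is what makes an operator override cheap: the reviewer can check the cited clip or ticket instead of trusting the narrative.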

KPIs: task success rate, hallucination-to-evidence gap, turnaround time per incident, operator override rate.

AI-DRIVEN DIGITAL TWINS: DECIDE IN THE TWIN, EXECUTE IN THE REAL

From dashboards to decision engines: Digital twins become AI-driven—they don’t just simulate; they propose actions, evaluate trade-offs, and watch outcomes in the real system.

Examples:

- Supply chain: simulate routes, fuel, ETAs, and stock-outs; choose the plan minimizing cost and delay risk under constraints.

- Smart grids: predict demand, schedule storage, hedge risk under weather uncertainty.

- Personalized medicine: patient-specific twins to preview likely responses and side-effects.

Build pattern: data connectors → physics/simulation engine → ML surrogate models (fast what-ifs) → decision policy (constrained optimization or agent) → A/B or canary execution → feedback loop.
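The middle of that build pattern, a fast surrogate feeding a constrained decision policy, can be sketched in a few lines. The `Plan` fields and the surrogate's coefficients are invented for illustration; in practice the surrogate would be a model fitted to simulator output, and the constraint set would be larger.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Plan:
    name: str
    distance_km: float
    stops: int


def surrogate(plan: Plan) -> Dict[str, float]:
    """Fast what-if model. The coefficients are made up for this example."""
    cost = 1.2 * plan.distance_km + 30.0 * plan.stops
    delay_risk = min(1.0, 0.05 * plan.stops + plan.distance_km / 5000.0)
    return {"cost": cost, "delay_risk": delay_risk}


def decide(plans: List[Plan], max_delay_risk: float) -> Optional[Plan]:
    """Constrained choice: the cheapest plan whose predicted delay risk is within the limit."""
    feasible = [p for p in plans if surrogate(p)["delay_risk"] <= max_delay_risk]
    return min(feasible, key=lambda p: surrogate(p)["cost"], default=None)


plans = [
    Plan("direct", distance_km=900, stops=0),
    Plan("hub", distance_km=700, stops=3),
    Plan("coastal", distance_km=1100, stops=1),
]
strict = decide(plans, max_delay_risk=0.25)
relaxed = decide(plans, max_delay_risk=0.30)
print(strict.name, relaxed.name)
```

Tightening the risk limit changes the answer: the cheaper multi-stop plan is only chosen when the constraint allows its higher predicted delay risk. Returning `None` when nothing is feasible gives the execution layer a clear signal to escalate rather than act.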

KPIs: forecast accuracy, cost savings, downtime avoided, policy regret, safety violations (must be zero).

Guardrails: transparency for regulated contexts, strict separation of simulation vs. execution, signed decision logs.

NEURO-AI & BRAIN-COMPUTER INTERFACES: A NEW I/O FOR THE NEXT AI BOOM

Why it’s profound: BCI—interpreting and stimulating neural activity—moves AI from screens and keyboards to thought-level interfaces and motor restoration. Near-term wins: communication for locked-in patients, assistive control for prosthetics, adaptive neuro-feedback for attention or stress.

Product directions:

- Assistive speech: imagined-speech decoding to text/audio.

- Hands-free computing: cursor & UI control for accessibility and AR/VR.

- Cognitive support: memory cues, learning reinforcement (ethically supervised).

Constraints: ethics, privacy, informed consent, medical-grade reliability, bounded and predictable latency.

KPIs: decoding accuracy, words-per-minute, false-activation rate, user comfort, safety incidents (zero tolerance).

AI FOR SCIENTIFIC DISCOVERY: AUTONOMOUS LABS AND HYPOTHESIS ENGINES

From analysis to agency: The NEXT AI BOOM in science centers on agentic research loops: read literature, propose hypotheses, plan experiments, run simulations, analyze results, and propose next steps, with human scientists steering the loop.

High-impact domains:

- Drug discovery: generative molecules → docking sims → lab prioritization.

- Materials: alloy/composite design for heat, corrosion, or cost constraints.

- Climate & energy: parameter sweeps for mitigation strategies.

Engineering pattern: knowledge graph + LLM planner + domain simulators + Bayesian optimization + lab robotics + governance.
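The loop at the heart of that pattern (propose, experiment, analyze, repeat) can be shown with a toy stand-in. To stay dependency-free, this sketch swaps Bayesian optimization for simple random local search, which is a much weaker proposal strategy; the simulator is an invented response curve, and `research_loop` is a hypothetical name.

```python
import random
from typing import Callable, List, Tuple


def research_loop(
    simulate: Callable[[float], float],
    start: float,
    rounds: int,
    step: float,
    seed: int = 0,
) -> Tuple[float, float, List[Tuple[float, float]]]:
    """Propose a candidate near the best so far, run the simulator, keep improvements."""
    rng = random.Random(seed)  # fixed seed so a run can be replayed
    best_x, best_y = start, simulate(start)
    history = [(best_x, best_y)]  # full experiment log
    for _ in range(rounds):
        candidate = best_x + rng.uniform(-step, step)  # "hypothesis"
        result = simulate(candidate)                   # "experiment"
        history.append((candidate, result))
        if result > best_y:                            # "analysis"
            best_x, best_y = candidate, result
    return best_x, best_y, history


def toy_simulator(x: float) -> float:
    """Made-up response curve that peaks at x = 2.0."""
    return -(x - 2.0) ** 2


best_x, best_y, history = research_loop(toy_simulator, start=0.0, rounds=200, step=0.5)
print(f"best setting {best_x:.2f} after {len(history)} experiments")
```

Logging every trial, including the failures, is what supports the reproducibility KPI: the `history` list plus the seed is enough to replay the run.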

KPIs: hits per experiment, cycle time from idea to validated result, novelty vs. prior art, reproducibility score.

Risk posture: avoid paper-overfitting; mandate independent replication; watermark synthetic data; disclose model limitations.

TRUST, SAFETY, AND EXPLAINABLE AI: THE NON-NEGOTIABLE TRUST STACK

Why the trust stack fuels the NEXT AI BOOM: As autonomy rises, trust becomes the gating factor for adoption in finance, healthcare, education, and public sector.

Trust stack layers:

- Data lineage: where did facts, fine-tunes, and prompts come from?

- Eval suite: robustness, bias/fairness, toxicity, jailbreak resistance, PII safety.

- Explainability: rationales, citations, confidence, counterfactuals.

- Controls: rate limits, budget caps, permissions, audit trails, red-team tests.

- Governance: policies mapped to regulation; third-party audits; incident response.
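One common way to make the audit trail in the controls layer tamper-evident is a hash chain, where each entry's hash covers the previous entry's hash. The sketch below uses only the standard library; the entry layout and the actor and action names are assumptions. A chain like this detects edits but does not prevent them, so a real deployment would also need protected storage or signatures.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(event: Dict, prev_hash: str) -> str:
    payload = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: List[Dict], event: Dict) -> None:
    """Append an event whose hash covers the event and the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": _digest(event, prev_hash)})


def verify(log: List[Dict]) -> bool:
    """Recompute every hash; an edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or _digest(entry["event"], prev_hash) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True


audit: List[Dict] = []
append_entry(audit, {"actor": "CodeBot", "action": "open_pr"})
append_entry(audit, {"actor": "human", "action": "approve"})
intact = verify(audit)

audit[0]["event"]["action"] = "force_push"  # simulate tampering with an old entry
still_valid = verify(audit)
print(intact, still_valid)
```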

KPIs: eval pass rate per release, time-to-detection for regressions, audit readiness score, incident MTTR.
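Two of these KPIs reduce to simple arithmetic once the raw events are recorded. The sketch below assumes a per-release dictionary of eval outcomes and incidents with detection and resolution timestamps in minutes; the field names and sample values are hypothetical.

```python
from statistics import mean
from typing import Dict, List


def eval_pass_rate(results: Dict[str, bool]) -> float:
    """Share of eval suites that passed for one release."""
    return sum(results.values()) / len(results)


def mttr_minutes(incidents: List[Dict[str, float]]) -> float:
    """Mean time to recovery: average of (resolved - detected), in minutes."""
    return mean(i["resolved_min"] - i["detected_min"] for i in incidents)


release = {"robustness": True, "bias": True, "toxicity": False, "pii": True}
incidents = [
    {"detected_min": 0, "resolved_min": 30},
    {"detected_min": 100, "resolved_min": 190},
]
print(eval_pass_rate(release), mttr_minutes(incidents))
```

Tracking both per release makes regressions visible as a trend rather than as a one-off surprise.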

Business impact: the organizations that master governance will deploy faster, face fewer rollbacks, and win enterprise trust.

PYTHON MINI-EXAMPLE: A MORE COMPLETE MULTI-AGENT ORCHESTRATION SKELETON

This toy shows how a planner breaks a goal into tasks, routes them to specialists, logs artifacts, and enforces simple guardrails. Replace handlers with your tools (GitHub, DB, CI/CD, observability) and add retrieval/memory.

    # next_ai_boom_orchestrator.py (toy skeleton)
    import time
    import uuid
    from dataclasses import dataclass, field
    from typing import Any, Callable, Dict, List


    @dataclass
    class Artifact:
        id: str
        kind: str
        content: Any
        meta: Dict[str, Any]


    @dataclass
    class Agent:
        name: str
        skill: str
        handler: Callable[[str, Dict[str, Any]], Artifact]

        def act(self, task: str, ctx: Dict[str, Any]) -> Artifact:
            return self.handler(task, ctx)


    @dataclass
    class Orchestrator:
        agents: List[Agent]
        # budget_tokens is declared here but not enforced anywhere in this toy
        policy: Dict[str, Any] = field(
            default_factory=lambda: {"max_steps": 20, "budget_tokens": 1_000_000}
        )
        memory: List[Artifact] = field(default_factory=list)

        def plan(self, goal: str) -> List[str]:
            # naive planner; replace with LLM planning
            steps = ["requirements: " + goal, "code: implement", "test: add coverage", "deploy: staged rollout"]
            return steps

        def route(self, step: str) -> Agent:
            # match on the "skill:" prefix so words inside the goal text cannot misroute a step
            for a in self.agents:
                if step.startswith(a.skill + ":"):
                    return a
            return self.agents[0]  # fallback: first agent

        def run(self, goal: str) -> List[Artifact]:
            steps = self.plan(goal)
            outputs = []
            for i, step in enumerate(steps, 1):
                if i > self.policy["max_steps"]:
                    break
                agent = self.route(step)
                art = agent.act(step, {"iteration": i, "goal": goal})
                self.memory.append(art)
                outputs.append(art)
            return outputs


    # Handlers (replace with real tools or LLM calls)
    def reqs_handler(task, ctx):
        return Artifact(str(uuid.uuid4()), "spec",
                        {"acceptance_criteria": ["endpoint exists", "latency < 200ms"]},
                        {"task": task, "ts": time.time()})


    def code_handler(task, ctx):
        return Artifact(str(uuid.uuid4()), "diff",
                        {"files": ["app/api.py", "tests/test_api.py"], "summary": "added endpoint + tests"},
                        {"task": task, "ts": time.time()})


    def test_handler(task, ctx):
        return Artifact(str(uuid.uuid4()), "test_report",
                        {"passed": 12, "failed": 0, "coverage_delta": "+8%"},
                        {"task": task, "ts": time.time()})


    def deploy_handler(task, ctx):
        return Artifact(str(uuid.uuid4()), "deploy",
                        {"strategy": "canary", "rollback": "auto-on-error"},
                        {"task": task, "ts": time.time()})


    if __name__ == "__main__":
        team = Orchestrator([
            Agent("SpecBot", "requirements", reqs_handler),
            Agent("CodeBot", "code", code_handler),
            Agent("TestBot", "test", test_handler),
            Agent("OpsBot", "deploy", deploy_handler),
        ])
        results = team.run("add export endpoint")
        for r in results:
            print(f"{r.kind.upper()} :: {r.content}")

How to productionize this skeleton:

- Swap the naive plan() for a planner agent (LLM + tool calls).

- Add retrieval (docs, code, tickets) + structured memory.

- Connect to GitHub (PRs), CI (tests), observability (SLIs/SLOs).

- Enforce policy (budgets, permissions) and audit logs.

- Build dashboards for cycle time, defects, cost, and trust metrics.
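As one illustration of the "retrieval + structured memory" step, the sketch below stores typed records (decisions, assumptions, artifacts) and recalls them by keyword overlap. Keyword matching is a deliberately simple stand-in for vector search, and `MemoryRecord`, `StructuredMemory`, and the sample records are hypothetical names and data.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class MemoryRecord:
    kind: str  # e.g. "decision", "assumption", "artifact"
    text: str
    tags: List[str] = field(default_factory=list)


@dataclass
class StructuredMemory:
    records: List[MemoryRecord] = field(default_factory=list)

    def remember(self, kind: str, text: str, tags: Optional[List[str]] = None) -> None:
        self.records.append(MemoryRecord(kind, text, list(tags or [])))

    def recall(self, query: str, kind: Optional[str] = None) -> List[MemoryRecord]:
        """Return records sharing at least one word with the query, best match first."""
        words = set(query.lower().split())
        scored = []
        for record in self.records:
            if kind is not None and record.kind != kind:
                continue
            vocabulary = set(record.text.lower().split()) | set(record.tags)
            overlap = len(words & vocabulary)
            if overlap:
                scored.append((overlap, record))
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [record for _, record in scored]


memory = StructuredMemory()
memory.remember("decision", "use canary deploy for export endpoint", ["deploy"])
memory.remember("assumption", "export endpoint latency under 200ms", ["latency"])
memory.remember("artifact", "test report for api", ["test"])

hits = memory.recall("export endpoint latency")
print([r.kind for r in hits])
```

Filtering by `kind` lets a planner ask narrower questions, such as which decisions already constrain a step, without wading through every stored artifact.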

HOW THESE FRONTS REINFORCE THE NEXT AI BOOM

- Autonomous ecosystems need multimodal AI to ground decisions in rich signals, and lifelong learning to adapt.

- Digital twins accelerate when fed by multimodal perception and tuned by autonomous research loops.

- BCI becomes viable at scale only with the trust stack—governance, safety, and explainability.

- AI-powered software dev multiplies progress across all domains by shrinking the build-measure-learn cycle.

WHAT THE NEXT AI BOOM MEANS FOR YOU (ACTIONABLE PLAYBOOK)

For builders (startups/teams):

- Ship a thin vertical: one painful workflow automated end-to-end with agents + trust.

- Treat agents like micro-services: versioned, tested, observable.

- Establish guardrails from day one: allowlists, budgets, red-team prompts.

For leaders (product/execs):

- Fund a multi-quarter roadmap: orchestration + governance + measurement.

- Align on risk tiers: which steps can be fully automated vs. require human sign-off.

- Decide on platform vs. app: are you building a product or a capability layer?

For researchers & data teams:

- Prototype autonomous research loops with domain simulators.

- Build eval farms: nightly runs that grade robustness, bias, and regressions.

- Curate ground-truth data and establish clear provenance.

For everyone:

- Invest in human skills that pair well with AI: problem framing, ethics, systems thinking, communication.

FAQ — NEXT AI BOOM

IS AGENTIC AI THE FINAL DESTINATION?

No. Agentic AI is a milestone. The NEXT AI BOOM is the system-of-systems era: coordinated ecosystems, governed adaptation, and evidence-backed decisions.

WHICH FRONTIER WILL COMMERCIALIZE FASTEST?

Expect multimodal AI and AI-driven digital twins to lead short-term ROI in ops, support, manufacturing, logistics, and energy—because they tie directly to measurable savings and resilience.

HOW DO WE REDUCE RISK WHILE MOVING FAST?

Adopt the trust stack early—evals, explainability, permissions—so rollouts are controlled, auditable, and reversible.

HOW SHOULD SMALL TEAMS COMPETE?

Pick a narrow vertical with annoying manual glue work; out-execute with agentic orchestration + great UX + obsessive reliability.

TL;DR — NEXT AI BOOM

The NEXT AI BOOM will be defined by orchestrated ecosystems of agents, continual learning, multimodal perception, AI-driven digital twins, neuro-AI interfaces, autonomous science, and a trust stack that makes it all deployable in the real world. Teams that master orchestration + governance will build faster, safer, and more defensible products.

FINAL CALL-TO-ACTION

- Bookmark this NEXT AI BOOM playbook and share it with your team.

- Pick one workflow and prototype an end-to-end agentic system this week.

- Add trust & measurement from day one—because reliability is your moat.

Want a follow-up post with a GitHub starter kit (planner + memory + tools + policy + dashboards) you can clone and run? Say the word, and I’ll ship a minimal stack tailored to Tech Niche Pro.

